Safety Library

Everything you could ever want to know about safety on Discord. Whether you’re a user, a moderator, or a parent, discover all of our tools and resources and how to use them.


1. Secure your account

Choose a secure password

- A strong password is key to protecting your account. Choose a long password that is hard to guess, mixes uppercase letters, lowercase letters, and special characters, and isn’t used for anything else.

- We recommend checking out password managers like 1Password (Mac) or Dashlane (Windows), which make creating and storing secure passwords extremely easy.

- Discord requires passwords to be at least 8 characters long. Certain regions may have additional requirements.
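The guidance above works like a simple checklist. The following is a minimal Python sketch, assuming that "special characters" means ASCII punctuation. The function name `password_feedback` is hypothetical, and this is not the check Discord actually runs.

```python
import string

# Hypothetical illustration of the password guidance; not Discord's validation code.
MIN_LENGTH = 8  # the minimum length Discord states


def password_feedback(password: str, used_elsewhere: set[str]) -> list[str]:
    """Return a list of problems with `password`; an empty list means none were found."""
    problems = []
    if len(password) < MIN_LENGTH:
        problems.append(f"shorter than {MIN_LENGTH} characters")
    if not any(c.isupper() for c in password):
        problems.append("no uppercase letters")
    if not any(c.islower() for c in password):
        problems.append("no lowercase letters")
    # Assumption: treat ASCII punctuation as "special characters".
    if not any(c in string.punctuation for c in password):
        problems.append("no special characters")
    # Reusing a password from another account is flagged too.
    if password in used_elsewhere:
        problems.append("already used for another account")
    return problems
```

For example, `password_feedback("hunter2", set())` reports that the password is too short and has no uppercase letters or special characters. A password manager handles all of these checks for you when it generates a password.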

Consider enabling two-factor authentication (2FA)

- Two-factor authentication (2FA) is the most secure way to protect your account. You can use Google Authenticator, Authy, and other authenticator apps on a mobile device in order to authorize access to your account. Once 2FA is enabled, you’ll have the option to further increase your account’s security with SMS Authentication by adding your phone number to your Discord account.

You can enable 2FA in your User Settings. You can also refer to this article for more information.


2. Set your privacy & safety settings

Your settings are very important. They give you control over who can contact you and what they can send you. You can access your privacy and safety settings in the Data & Privacy section of your User Settings.

We know it’s important for users to understand what controls they have over their experience on Discord and how to be safer. This article covers settings that can help reduce the amount of unwanted content you see on Discord and promote a safer environment for everyone.

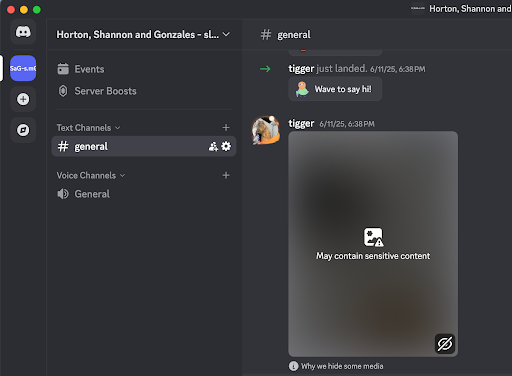

Sensitive media

At any time, you can configure personal settings to blur or block potentially sensitive content in DMs. The “blur” option in our sensitive content filters applies to all historic and new media. For teen users, Discord blurs potentially sensitive media by default in direct messages (DMs) and group direct messages (GDMs) from friends, as well as in servers. Adults can opt into these filters by changing their sensitive media preferences in User Settings > Content & Social. Learn more about how to do this here. For all users, the default setting is “block” for DMs and GDMs from non-friends.

At any time, you can also ignore or block the user responsible. You can also report content that breaks our rules.

DM spam filter

This filter automatically sends direct messages that may contain spam into a separate spam inbox.

These filters are customizable, and you can choose to turn them off. By default, these filters are set to “Filter direct messages from non-friends.” Choose “Filter all direct messages” if you want all direct messages that you receive to be filtered, or select “Do not filter direct messages” to turn these filters off.
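To illustrate how the three filter settings interact, here is a hypothetical Python sketch. Only the three option labels and the default come from this article; the `looks_like_spam` flag stands in for Discord's spam detection, which is not public, and all names are illustrative.

```python
from enum import Enum


class SpamFilterMode(Enum):
    FILTER_NON_FRIENDS = "Filter direct messages from non-friends"  # the default
    FILTER_ALL = "Filter all direct messages"
    DO_NOT_FILTER = "Do not filter direct messages"


def goes_to_spam_inbox(mode: SpamFilterMode, sender_is_friend: bool, looks_like_spam: bool) -> bool:
    """Decide whether a DM lands in the spam inbox (hypothetical model)."""
    if not looks_like_spam:
        return False  # messages that don't look like spam are never diverted
    if mode is SpamFilterMode.DO_NOT_FILTER:
        return False  # filtering is turned off entirely
    if mode is SpamFilterMode.FILTER_NON_FRIENDS:
        return not sender_is_friend  # only DMs from non-friends are filtered
    return True  # FILTER_ALL: every DM that looks like spam is filtered
```

With the default setting, a spam-like message from a friend still reaches your regular inbox, while the same message from a non-friend is diverted.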

Direct message (DM) settings

- By default, anyone who shares a server with you can send you a DM. However, you can toggle off the "Allow direct messages from server members" setting to block DMs from server members who aren’t on your friends list. When you toggle this setting off, you will be prompted to choose whether to apply this change to all of your existing servers. If you click "No," you’ll need to adjust your DM settings individually for each server you joined before toggling this setting off.

- To change this setting for a specific server, select Privacy Settings on the server’s dropdown list and toggle off the "Allow direct messages from server members" setting.

Friend request settings

Discord also enables you to control who can send you a friend request. You can find these settings in the Friend Requests section of your User Settings.

- Everyone - Selecting this means that anyone who knows your Discord username or is in a mutual server with you can send you a friend request.

- Friends of Friends - Selecting only this option means that for anyone to send you a friend request, they must have at least one mutual friend with you. You can view this in their user profile by clicking the Mutual Friends tab next to the Mutual Servers tab.

- Server Members - Selecting this means users who share a server with you can send you a friend request. Deselecting this while "Friends of Friends" is selected means that you can only be added by someone with a mutual friend.

If you don’t want to receive ANY friend requests, you can deselect all three options. However, you can still send out friend requests to other people.

You should only accept friend requests from users that you know and trust — if you aren’t sure, there’s no harm in rejecting the friend request. You can always add them later if it’s a mistake.
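The three friend request options combine as an "any of" rule: a request gets through if at least one selected option covers the sender. Here is a hypothetical Python sketch, assuming each option independently allows one group of senders; the class and function names are illustrative and not part of any Discord API.

```python
from dataclasses import dataclass


@dataclass
class FriendRequestSettings:
    everyone: bool            # anyone who knows your username or shares a server
    friends_of_friends: bool  # senders with at least one mutual friend
    server_members: bool      # senders who share a server with you


def can_send_friend_request(settings: FriendRequestSettings, has_mutual_friend: bool, shares_server: bool) -> bool:
    """Return True if a sender is allowed to send you a friend request (hypothetical model)."""
    if settings.everyone:
        return True
    if settings.friends_of_friends and has_mutual_friend:
        return True
    if settings.server_members and shares_server:
        return True
    # All three options deselected, or none of them covers this sender.
    return False
```

For example, with only "Friends of Friends" selected, a server member without a mutual friend cannot send you a request, and with all three deselected nobody can.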

3. Follow safe account practices

As with any online interaction, we recommend following some simple rules while you’re on Discord:

Be wary of suspicious links and files

- DON'T click on links that look suspicious or appear to have been shortened or altered. Discord will try to warn you about links that are questionable, but it’s no substitute for thinking before you click.

- DON'T download files or applications from users you don't know or trust. Were you expecting a file from someone? If not, don’t click the file!

- DON'T open a file that your browser or computer has flagged as potentially malicious without knowing it’s safe.

Never give away your account information

- DON'T give away your Discord account login or password information to anyone. We’ll never ask for your password. We also won’t ask for your token, and you should never give that to anyone.

- DON'T give away account information for any account you own on any platform to other users on Discord. Malicious individuals might ask for this information and use it to take over your accounts.

- DO report any accounts that claim to be Discord staff or that ask for account information to the Trust & Safety team.

Again, Discord will never ask you for your password either by email or by Discord direct message. If you believe your account has been compromised, submit a report to Trust & Safety here.

4. Block other users when needed

We understand that there are times when you might not want to interact with someone. We want everyone to have a positive experience on Discord and have you covered in this case.

How blocking works

- When you block someone on Discord, they will be removed from your friends list (if they were on it) and will no longer be able to send you DMs.

- Your existing message history with the user will remain, but any new messages they post in a shared server will be hidden from you, though you can still choose to view them.

How to block a user

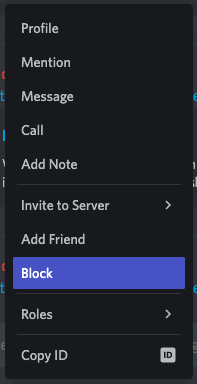

On desktop:

- Right-click the user's @Username to bring up a menu.

- Select Block in the menu.

On mobile:

- Tap the user's @Username to bring up the user's profile.

- Tap the three dots in the upper right corner to bring up a menu.

- Select Block in the menu.

If you have blocked a user but they create a new account to try to contact you, please report the user to the Trust & Safety team. You can learn more about how to do this at this link.

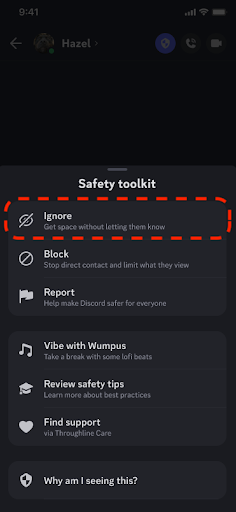

Discover how to use Discord's Ignore feature - a helpful tool that lets you make space from specific users without fully blocking them or letting them know. With Ignore, you can hide new messages, DM and server message notifications, profiles, and activities from those specific users, giving you more control over your experience on Discord.

General Tips to Protect Against Spam and Hacking

- Never click on unfamiliar or unexpected links. If you follow a link that takes you off Discord, the external site may be able to collect your personal information. We recommend scanning unfamiliar links with a site checker like Sucuri or VirusTotal before clicking them. You may also consider running shortened URLs through a URL expander so you know exactly where you will be directed.

- Never download unfamiliar files from anyone you don't know or trust.

- Be careful about sharing personal information. Discord is a great way to meet new friends and join new communities, but as with any online interaction, protect yourself by only sharing personal information with people you know and trust.

- Discord will only make announcements through our official channels. We do not distribute information secondhand through users or chain messages.

If you believe your account has been compromised, submit a report to our Safety team here.

If you’re getting unsolicited messages or friend requests, this article explains how to change your settings.

Spam

Discord uses a proactive spam filter to protect the experience of our users and the health of the platform. Sending spam is against our Terms of Service and Community Guidelines. We may take action against any account, bot, or server using the tactics described below or similar behavior.

Direct Message (DM) spam

Receiving unsolicited messages or ads is a bad experience for users. These are some examples of DM spam for both users and bots:

- unsolicited messages and advertisements

- mass server invites

- multiple messages with the same content over a short period of time
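The third example above, repeated identical messages in a short period, can be detected with a sliding time window. This is a hypothetical Python sketch: the threshold of 5 repeats in 60 seconds is an assumption for illustration, and Discord does not publish its actual limits or detection method.

```python
from collections import defaultdict, deque


class DuplicateMessageDetector:
    """Flag a sender who repeats the same content too often within a time window (hypothetical)."""

    def __init__(self, max_repeats: int = 5, window_seconds: float = 60.0):
        self.max_repeats = max_repeats        # assumed threshold, not Discord's
        self.window_seconds = window_seconds  # assumed window, not Discord's
        # (sender, content) -> timestamps of recent identical messages
        self._sent: defaultdict[tuple[str, str], deque] = defaultdict(deque)

    def record(self, sender: str, content: str, timestamp: float) -> bool:
        """Record a message (timestamps in non-decreasing order); return True if it looks like spam."""
        times = self._sent[(sender, content)]
        times.append(timestamp)
        # Drop timestamps that have fallen out of the sliding window.
        while times and timestamp - times[0] > self.window_seconds:
            times.popleft()
        return len(times) >= self.max_repeats
```

Because old timestamps expire, the same message sent occasionally over a long period is not flagged; only bursts within the window are.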

Join 4 Join

Join 4 Join is the process of advertising for others to join your server with the promise to join their server in return. This might seem like a quick and fun way to introduce people to your server and to join new communities, but there’s a thin line between Join 4 Join and spam.

Even if these invitations are not unsolicited, they might be flagged by our spam filter. Sending a large number of messages in a short period of time creates a strain on our service. That may result in action being taken on your account.

Joining many servers, sending many friend requests

While we do want you to find new communities and friends on Discord, we enforce rate limits against spammers who might abuse this through bulk joins or bulk requests. Joining a lot of servers simultaneously or sending a large number of friend requests might be considered spam. To shut down spambots, we take action against accounts that join servers too frequently or send too many friend requests at once. Most Discord users will never encounter our proactive spam filter, but if, for example, you send friend requests to everyone in a thousand-person server within a few minutes, we may take action on your account. Instead of joining many servers at once, we recommend using Server Discovery to find active public communities on topics you’re passionate about.

Servers dedicated to spamming actions

Servers dedicated to mass copy-paste messaging, or encouraging DM advertising, are considered dedicated spam servers.

Many servers have popular bots that reward active messaging. We don’t consider these to be spambots, but spamming messages to trigger these bots is considered abuse of our API and may result in our taking action on the server and/or the users who participate in mass messaging. Beyond cheating those systems, sending a large number of messages in a short period of time harms the platform.

Invite rewards servers

Invite reward servers are servers that promise some form of perk, often financial, for inviting other users to join the server. We strongly discourage this activity, as it often results in users being spammed with unsolicited messages. If it leads to spam or another form of abuse, we may take action, including removing the users and the server.

Bots and Selfbots

If a bot contacts you to be added to your server, or asks you to click on a suspicious link, please report it to our Trust & Safety team for investigation.

We don’t create bots to offer you free products. This is a scam. If you receive a DM from a bot offering you something, or asking you to click on a link, please report it.

We understand the allure of free stuff. But we’re sorry to say these bots are not real. Do not add them to your server in hopes of receiving something in return as they likely will compromise your server. If anything gets deleted, we have no way of restoring what was lost.

Using a user token in any application (known as a selfbot), or any automation of your account, may result in account suspension or termination. Our automated system will flag bots it suspects are being used for spam or any other suspicious activity. The bot, as well as the bot owner’s account, may be disabled as a result of our investigation. If your bot’s code is publicly available, remove your bot’s token from the code to prevent it from being compromised.
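One common way to keep a bot token out of public code is to read it from an environment variable at startup instead of writing it into the source. Here is a minimal Python sketch, assuming a hypothetical variable named `DISCORD_BOT_TOKEN`; pass the returned value to whichever bot library you use.

```python
import os


def load_bot_token(env_var: str = "DISCORD_BOT_TOKEN") -> str:
    """Read the bot token from the environment (the variable name is a hypothetical choice)."""
    token = os.environ.get(env_var)
    if not token:
        # Fail loudly rather than falling back to a token hard-coded in the source.
        raise RuntimeError(f"Set the {env_var} environment variable; never hard-code the token.")
    return token
```

Because the token lives only in the environment of the machine running the bot, publishing the code does not expose it. If a token was ever committed publicly, treat it as compromised and generate a new one.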

Hacking incidents, DDoS attacks

If you believe your account has been compromised through hacking, here are some steps you can take to regain access and protect yourself in the future.

1. Reset your password.

- Choose a long password with a mix of uppercase letters, lowercase letters, and special characters that is hard to guess and isn’t used for anything else. We recommend using a password manager which can make creating and storing secure passwords extremely easy.

- If your account’s token has been compromised, reset your password to generate a new token. You should never give your account password or token to anyone. Discord will never ask for this information.

2. Turn on Two-Factor Authentication (2FA)

Two-factor authentication (2FA) strengthens your account to protect against intruders by requiring you to provide a second form of confirmation that you are the rightful account owner. Here’s how to set up 2FA on your Discord account. If for some reason you’re having trouble logging in with 2FA, here’s our help article.

3. DDoS (Distributed Denial of Service) attacks

A distributed denial of service (DDoS) attack floods an IP address with useless requests, overwhelming the targeted modem or router so that it can no longer connect to the internet. If you believe your IP address has been targeted in a DDoS attack, here are some steps you can take:

- Reset your router via its manufacturer instructions.

- Unplug your modem for 5-10 minutes and then plug it back in. This can cycle your IP address to a new one.

- Contact your internet service provider (ISP) for assistance. Your ISP might also be able to tell you where the attack came from. Please note that Discord does not have this information.

- If you believe this attack is coming from a user on Discord, please file a report with Trust & Safety.

- Please note: Discord never shares your IP address with other users. Bad actors might send malicious links such as IP grabbers or other scams in an attempt to get your IP address. Never click on unfamiliar links and be wary about sharing your IP address with anyone.

Labeling age-restricted content properly on Discord

To help keep age-restricted content in a clearly labeled, dedicated spot, we’ve added a channel setting that allows you to designate one or more text channels in your server as age-restricted.

Anyone who opens the channel will see a notice that it might contain age-restricted content. To access the channel, they'll need to confirm that they are over 18.

Any content that cannot be placed in an age-gated channel, such as avatars, server banners, and invite splashes, cannot contain age-restricted content.

Age-restricted content that is not placed in an age-gated channel will be deleted by moderators, and depending on the rules broken, the user posting that content may be banned from the server.

It's worth mentioning that while having a dedicated place for your age-restricted content is permitted, there is still some material that isn't appropriate anywhere on Discord. For example, content that sexualizes minors is never allowed anywhere on Discord. If you're unsure of what is allowed on Discord, check out our Community Guidelines.

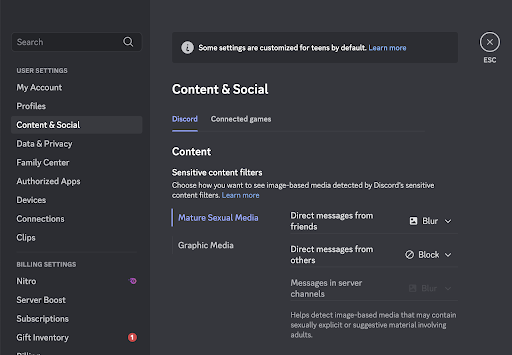

If you’re an adult (18+) and want to customize the sensitive content you see

You can choose to show, blur, or block potentially sensitive image-based media across both filter categories:

- Mature Sexual Media Filter: Detects image-based media that may contain sexually explicit or suggestive material involving adults

- Graphic Media Filter: Detects image-based media that may contain violent or potentially disturbing visual content

Adults can customize their sensitive content filters by changing their preferences in Content & Social settings. There are options to show, blur, or block image-based media detected by the filters, and each filter (Mature Sexual Media and Graphic Media) has separate controls for:

- Direct messages from friends

- Direct messages from others

- Messages in server channels

Default settings for Adults:

- DMs from friends: Show sensitive image-based media

- DMs from non-friends: Block sensitive image-based media

- Server channels: Show sensitive image-based media

Note: In servers, you can only choose to "show" or "blur" image-based media detected by the filters. Blocking is controlled by each server's individual safety settings.
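The adult defaults and the server-channel restriction above can be summarized as a small lookup table. This Python sketch is illustrative only: the context keys and the `set_preference` helper are hypothetical, and each filter (Mature Sexual Media and Graphic Media) would keep its own copy of the table.

```python
from enum import Enum


class Action(Enum):
    SHOW = "show"
    BLUR = "blur"
    BLOCK = "block"


# Adult defaults as listed above (one copy per filter category).
ADULT_DEFAULTS = {
    "dm_friends": Action.SHOW,  # Direct messages from friends
    "dm_others": Action.BLOCK,  # Direct messages from others
    "server": Action.SHOW,      # Messages in server channels
}


def set_preference(prefs: dict, context: str, action: Action) -> dict:
    """Return a copy of `prefs` with one context changed (hypothetical helper)."""
    if context not in prefs:
        raise KeyError(f"unknown context: {context}")
    if context == "server" and action is Action.BLOCK:
        # In servers only show or blur is available; blocking is a per-server safety setting.
        raise ValueError("server channels support only show or blur")
    updated = dict(prefs)
    updated[context] = action
    return updated
```

Returning a copy keeps the defaults unchanged, so you can always compare your current choices against them.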

If "Blur" is selected, image-based media detected by the filters where you are chatting will not be shown to you unless you choose to unblur it.

Adults can press the eye icon to unblur the image-based media, or press it again to hide the media behind a blur.

For multiple images sent in one message, the blurring will only be applied to the specific images that are detected across either filter category.


You can learn more about our sensitive content filters here, including the teen user experience.

Our Approach to Delivering a Positive User Experience

The core of our mission is to give everyone the power to find and create belonging in their lives. Creating a safe environment on Discord is essential to achieve this, and is one of the ways we prevent misuse of our platform. Safety is at the core of everything we do and a primary area of investment as a business:

- We invest talent and resources towards safety efforts. From Safety and Policy to Engineering, Data, and Product teams, about 15 percent of all Discord employees are dedicated to working on safety. Creating a safer internet is at the heart of our collective mission.

- We continue to innovate how we scale safety mechanisms, with a focus on proactive detection. Millions of people around the world use Discord every day. The vast majority engage in positive ways, but we take action on multiple fronts to address bad behavior and harmful content. For example, we use PhotoDNA image hashing to identify inappropriate images; we use advanced technology like machine learning models to identify and remedy offending content; and we empower and equip community moderators with tools and training to uphold our policies in their communities. You can read more about our safety initiatives and priorities below.

- Our ongoing work to protect users is conducted in collaboration and partnership with experts who share our mission to create a safer internet. We partner with a number of organizations to jointly confront challenges impacting internet users at large. For example, we partner with the Family Online Safety Institute, an international non-profit that endeavors to make the online world safer for children and families. We also cooperate with the National Center for Missing & Exploited Children (NCMEC), the Tech Coalition, and the Global Internet Forum to Counter Terrorism.

The fight against bad actors on communications platforms is unlikely to end soon, and our approach to safety is guided by the following principles:

- Design for Safety: We make our products safe spaces by design and by default. Safety is and will remain part of the core product experience at Discord.

- Prioritize the Highest Harms: We prioritize issues that present the highest harm to our platform and our users. This includes harm to our users and society (e.g. sexual exploitation, violence, sharing of illegal content) and platform integrity harm (e.g. spam, account take-over, malware).

- Design for Privacy: We carefully balance privacy and safety on the platform. We believe that users should be able to tailor their Discord experience to their preferences, including privacy.

- Embrace Transparency & Knowledge Sharing: We continue to educate users, join coalitions, build relationships with experts, and publish our safety learnings including our Transparency Reports.

Underpinning all of this are two important considerations: our overall approach towards content moderation and our investments in technology solutions to keep our users safe.

Our Technology Solutions

We believe that in the long term, machine learning will be an essential component of safety solutions. In 2021, we acquired Sentropy, a leader in AI-powered moderation systems, to advance our work in this domain. We will continue to balance technology with the judgment and contextual assessment of highly trained employees, as well as continuing to maintain our strong stance on user privacy.

Here is an overview of some of our key investments in technology:

- Safety Rules Engine: The rules engine allows our teams to evaluate user activities such as registrations, server joins, and other metadata. We can then analyze patterns of problematic behavior to make informed decisions and take uniform actions like user challenges or bans.

- AutoMod: AutoMod allows community moderators to block messages with certain keywords, automatically block dangerous links, and identify harmful messages using machine learning. This technology empowers community moderators to keep their communities safe.

- Visual Safety Platform: This is a service that can identify hashes of objectionable images such as child sexual abuse material (CSAM), and check all image uploads to Discord against databases of known objectionable images.
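Hash matching compares a fingerprint of each upload against a list of fingerprints of known objectionable images. The sketch below uses an exact SHA-256 hash for simplicity; that is an assumption, not how the Visual Safety Platform works. Perceptual hashes such as PhotoDNA (mentioned above) are designed to keep matching after an image is resized or re-encoded, whereas an exact hash changes if even one byte differs. The function names are hypothetical.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Exact-match fingerprint of the raw bytes (a simplification of perceptual hashing)."""
    return hashlib.sha256(data).hexdigest()


def matches_known_hash(upload: bytes, known_hashes: set[str]) -> bool:
    """Return True if the upload's fingerprint appears in the database of known hashes."""
    return sha256_hex(upload) in known_hashes
```

Appending a single byte to a known file is enough to make `matches_known_hash` return False, which is why perceptual hashing is preferred for this task in practice.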

Our Partnerships

In the field of online safety, we are inspired by the spirit of cooperation across companies and civil society groups. We are proud to engage with and learn from a wide range of companies and organizations, including:

- National Center for Missing & Exploited Children

- Family Online Safety Institute

- Tech Coalition

- Crisis Text Line

- Digital Trust & Safety Partnership

- Trust & Safety Professionals Association

- Global Internet Forum to Counter Terrorism

This cooperation extends to our work with law enforcement agencies. When appropriate, Discord complies with information requests from law enforcement agencies while respecting the privacy and rights of our users. Discord also may disclose information to authorities in emergency situations when we possess a good faith belief that there is imminent risk of serious physical injury or death. You can read more about how Discord works with law enforcement here.

Our Policy and Safety Resources

If you would like to learn more about our approach to Safety, we welcome you to visit the links below.

- Safety homepage

- How we enforce our rules

- How we investigate issues on our platform

- Latest Transparency Report

- Latest policy update

- Role of administrators and moderators

- How our Trust and Safety team addresses violent extremism on Discord

1. Let your teen know that the same rules apply online as offline.

- The same common sense and critical thinking they use offline should be used online too. For example, only accept friend requests from people you know. If something doesn’t seem right, tell a trusted adult. Behavior that’s not okay at school is also not okay online.

- Review Discord’s Community Guidelines with your teen and help them understand what is and isn’t permissible on Discord.

- Review their security and privacy settings and the servers they belong to with them so they fully understand who can interact with them on Discord.

2. Talk about what content they see and share online

- Much of our teens’ lives takes place online today, including their romantic relationships.

- Sharing personal images online can have long-term consequences and it’s important for teens to understand these consequences. Help them think about what it might feel like to have intimate photos of themselves forwarded to any number of peers by someone they thought they liked or trusted. Make them aware of the risk of sharing intimate pictures with anyone.

- Review your teen’s content filters on Discord. Have a discussion about who is on their friends list, who they’re messaging, and what information they are sharing about themselves.

3. Set limits on screen time with your teen.

- iOS and Android operating systems offer parental controls that can help you manage your teen's phone usage if needed. Apple and Microsoft offer similar controls for computers.

- If you are worried about how much time your teen spends online, set ground rules - for example, “no social media after 10 PM,” or “no phone at the dinner table.”

4. Try Discord for yourself

- Most of the services that your kids use online aren’t limited to teens. You might find that you also enjoy using them, and might even find new and fun ways to communicate as a family.

- You will also understand how these apps work from the inside, and will be more easily able to talk to your teens about staying safe online.

- You can download Discord and create a Discord account right here.

- We suggest asking your teen to give you a tour of Discord using your new account! Some prompts you can use to get started are:

- Show me how to add you as a friend!

- Show me how to create a server together.

- Show me your favorite features.

- Show me how you stay in touch with your friends.

- Show me your favorite emojis or gifs.

5. Third party resources

Many online safety experts provide resources for parents to navigate their kids’ online lives.

ConnectSafely published their Parent’s Guide to Discord which gives a holistic overview of how your teen uses Discord, our safety settings, and ways to start conversations with your teen about their safety.

For more information from other organizations, please go directly to their websites:

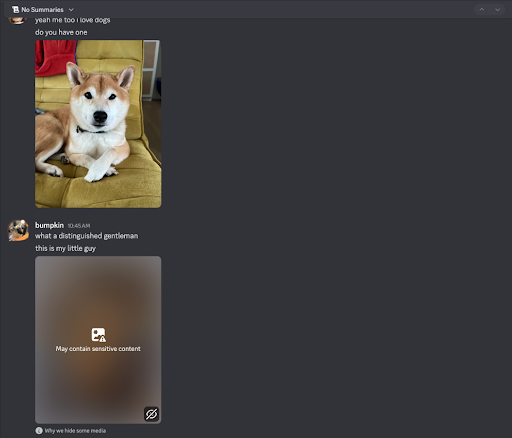

Sensitive Content Filters

Discord offers user settings that can help reduce the amount of unwanted content you see on Discord and promote a safer environment for everyone.

For teen users, image-based media filters are automatically enabled. These filters work to detect image-based media that may fall into two categories:

- Mature Sexual Media Filter: Detects image-based media that may contain sexually explicit or suggestive material involving adults

- Graphic Media Filter: Detects image-based media that may contain violent or potentially disturbing visual content

The filters currently process image-based media posted on Discord and blur or block content when sensitive material is detected. When these filters are set to blur, they apply to all historic and new media.

For teens, Discord blocks potentially sensitive image-based media in direct messages (DMs) from non-friends and blurs it in DMs from friends, group direct messages (GDMs), and servers. The blur helps prevent teens from seeing potentially inappropriate image-based media.

- In some countries, users can press the eye icon to unblur the media, and press it again to re-blur it if desired.

- For multiple images sent in one message, the blurring or blocking will only be applied to the specific images that are detected by the filters.

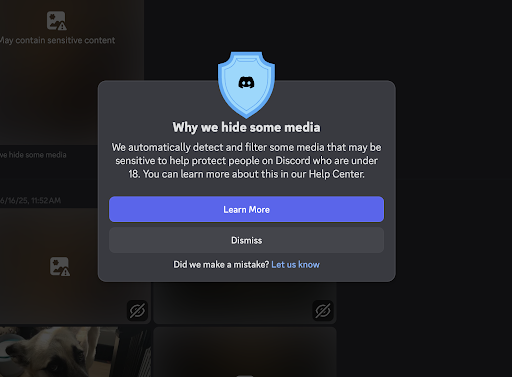

Teens can press on Why we hide some media below the blurred content. Selecting this will show more detail about our content filters and the types of media we detect.

- If teens press on Learn More they will be brought to this article for details on our sensitive content filters.

- In some countries, users can press on Let us know if the media we blurred should not be considered as sensitive. Pressing this will forward that media to our team in order to help us improve our sensitive image-based media detection.

Users can also let us know that media is not sensitive by right-clicking or long-pressing it and selecting Mark as Not Sensitive. The media will then be shown again, with the option to confirm Mark as not sensitive. This also forwards the media to Discord to help improve our sensitive media detection.

In Content & Social settings, teens can change their preferences for how to handle sensitive media. There are options to either blur or block content for DMs from friends and DMs from others.

- For DMs from friends: The default selection will be set to blur potentially sensitive image-based media for teens. This selection creates the blurred media experience shown above. If the block selection is made, this media will be blocked at upload and not shown at all.

- For DMs from non-friends: The default selection will be set to block sensitive image-based media for teens. This means the media will be blocked at upload and not shown at all.

For teens, image-based media that may be sensitive will be blurred in servers. Server owners may also choose to turn on server-specific safety settings that can block sensitive image-based media from being shared.

You can learn more about our sensitive content filters here.

Direct message (DM) settings

- This menu lets users determine who can contact them in a DM. You can access this setting by going into User Settings, selecting the Privacy & Safety section, and finding the "Server Privacy Defaults" heading.

- By default, whenever your teen is in a server, anyone in that server can send them a DM.

- You can disable the ability for anyone in a server with your teen to send your teen a DM by toggling “Allow direct messages from server members” to off. When you toggle this setting off, you will be prompted to choose if you would like to apply this change to all of your teen’s existing servers. If you click "No," this change will only affect new servers your teen joins, and you will need to adjust the DM settings individually for each server that they have previously joined (in Privacy Settings on the server’s dropdown list).

You can also control these settings on a server-by-server basis.

Friend request settings

- This menu lets you determine who can send your teen a friend request on Discord. You can access this setting by going into User Settings and selecting the Friend Requests section.

- Users should only accept friend requests from users that they know and trust. If your teen isn’t sure, there’s no harm in rejecting the friend request. They can always add that user later once they are sure of who that user is and that they want to connect with them.

You can choose from the following options when deciding who can send your teen a friend request.

- Everyone - Selecting this means that anyone who knows your teen's Discord username or is in a mutual server with your teen can send your teen a friend request. This is handy if your teen doesn’t share servers with someone that introduced them to Discord and your teen wants to let them send a friend request with just your teen's Discord Tag.

- Friends of friends - Selecting only this option means that for anyone to send your teen a friend request, they must have at least one mutual friend with your teen. You can view this in their user profile by clicking the Mutual Friends tab next to the Mutual Servers tab.

- Server members - Selecting this means users who share a server with your teen can send your teen a friend request. Deselecting this while "Friends of Friends" is selected means that your teen can only be sent a friend request by someone with a mutual friend.

If you don’t want your teen to receive ANY friend requests, you can deselect all three options. However, your teen can still send out friend requests to other people.

Direct Message spam filter

This feature automatically sends direct messages that may contain spam into a separate spam inbox.


These filters are customizable and you can choose to turn them off. By default, these filters are set to “Filter direct messages from non-friends.” Choose “Filter all direct messages” if you want all direct messages that you receive to be filtered, or select “Do not filter direct messages” to turn these filters off.

Ignoring

With Ignore, you can hide new messages, DM and server message notifications, profiles, and activities from those specific users, giving you more control over your experience on Discord.

There are a few different places to find this setting for each user. You will see the Ignore option in the following places:

When Viewing a User’s Profile

- To ignore a specific user, right-click the user’s avatar to open the drop-down menu.

- Select the Ignore option.

- After making your selection, press Ignore in the confirmation pop-up window.

To ignore a user on mobile, tap their avatar, then tap the ellipsis (⋯) in the upper-right corner > Ignore.

In Safety Tools and Safety Alerts

Safety Tools and Safety Alerts warn teen users when we detect potentially inappropriate messages. Teens can select the Ignore option when these tools and alerts appear.

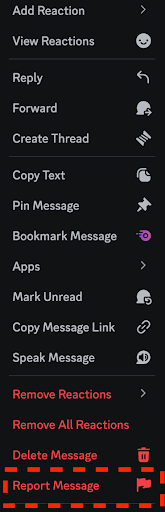

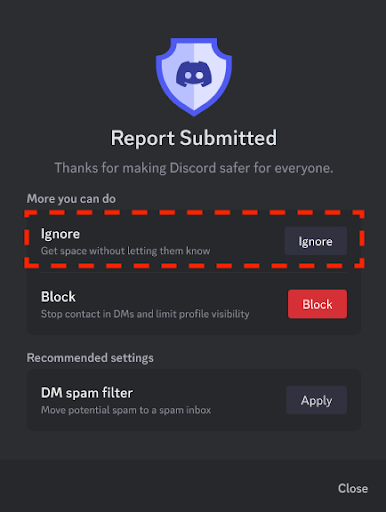

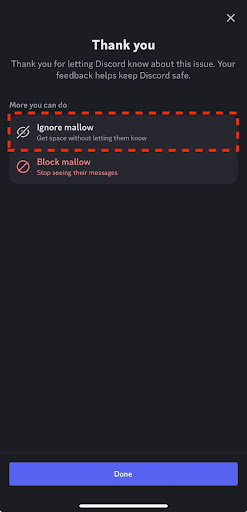

After Submitting a Report

To ignore a user, right-click the message you would like to report and press Report Message. After submitting a report, you will be able to select the Ignore option in the More you can do menu.

On mobile, long press the message you would like to report and tap Report. After the report has been submitted, you will be able to ignore the user.

In a Direct Message

1. To ignore a specific user in a DM, right-click the user’s avatar to open the drop-down menu.

2. Select the Ignore option.

3. After making your selection, press Ignore in the confirmation pop-up window.

On mobile, tap the user in DMs, then select the ellipsis (⋯) > Ignore and confirm your choice.

Here are additional details on what it means to ignore a user.

Blocking

If someone is bothering your teen, you always have the option to block the user. Blocking on Discord removes the user from your teen's Friends List, prevents them from messaging your teen directly, and hides their messages in any shared servers.

To block someone, your teen can right-click on that user's @username and select Block.

If your teen has blocked a user but that user creates a new account to try to contact them, please report the user to the Trust & Safety team. You can learn more about how to do this at this link.

Deleting an account

If you or your teen would like to delete your teen’s Discord account, please follow the steps described in this article. Please note that we are unable to delete an account by request from someone other than the account owner.

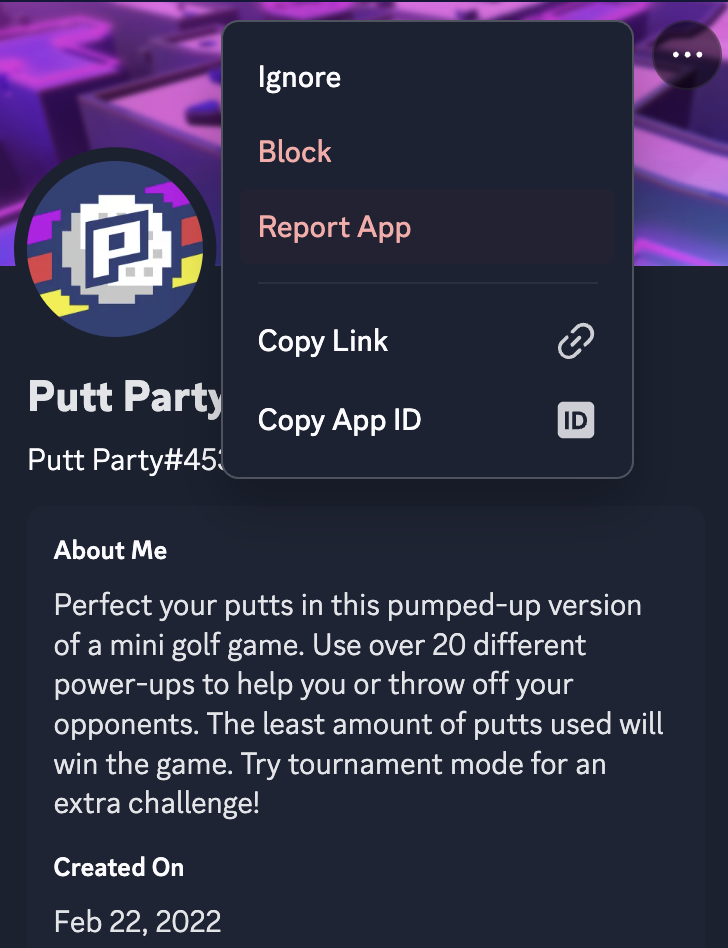

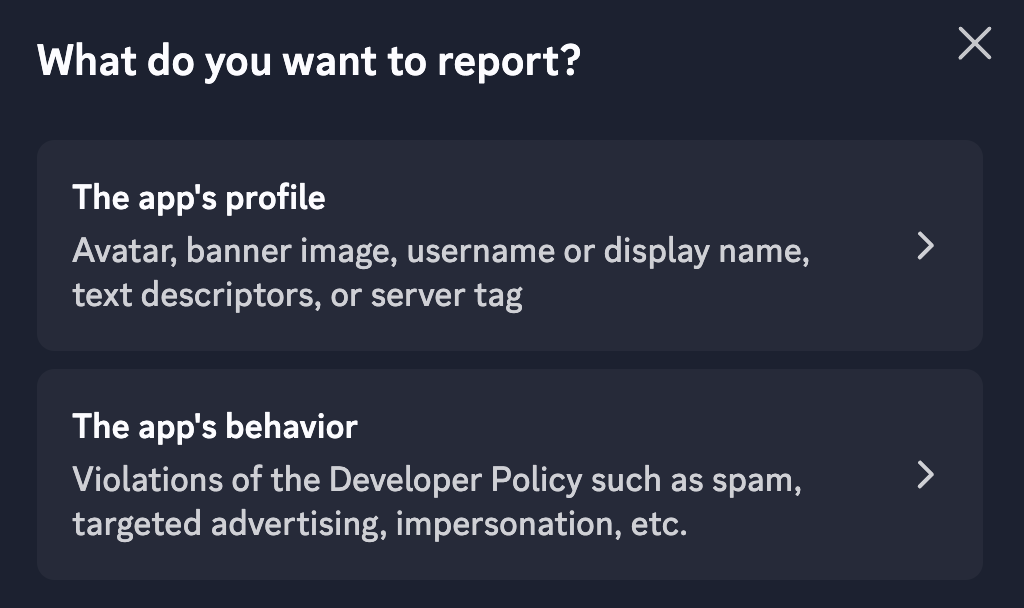

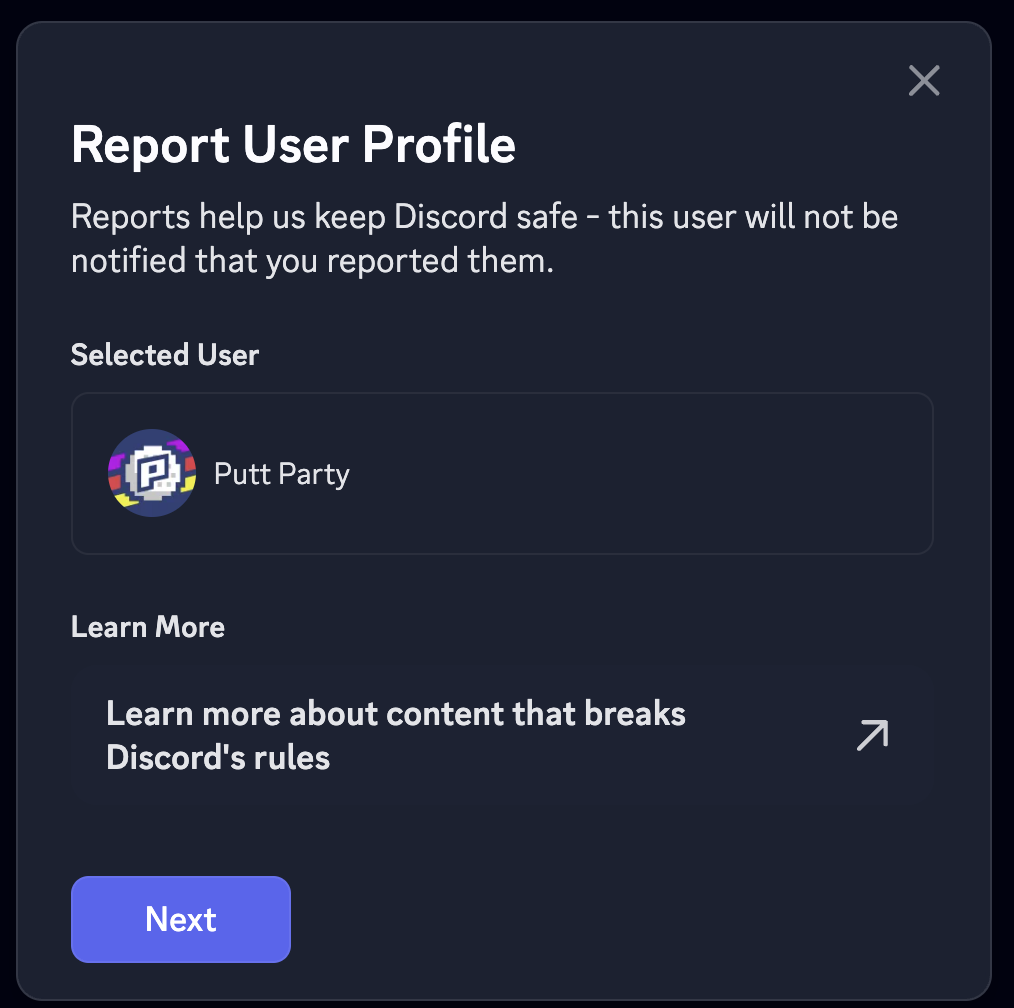

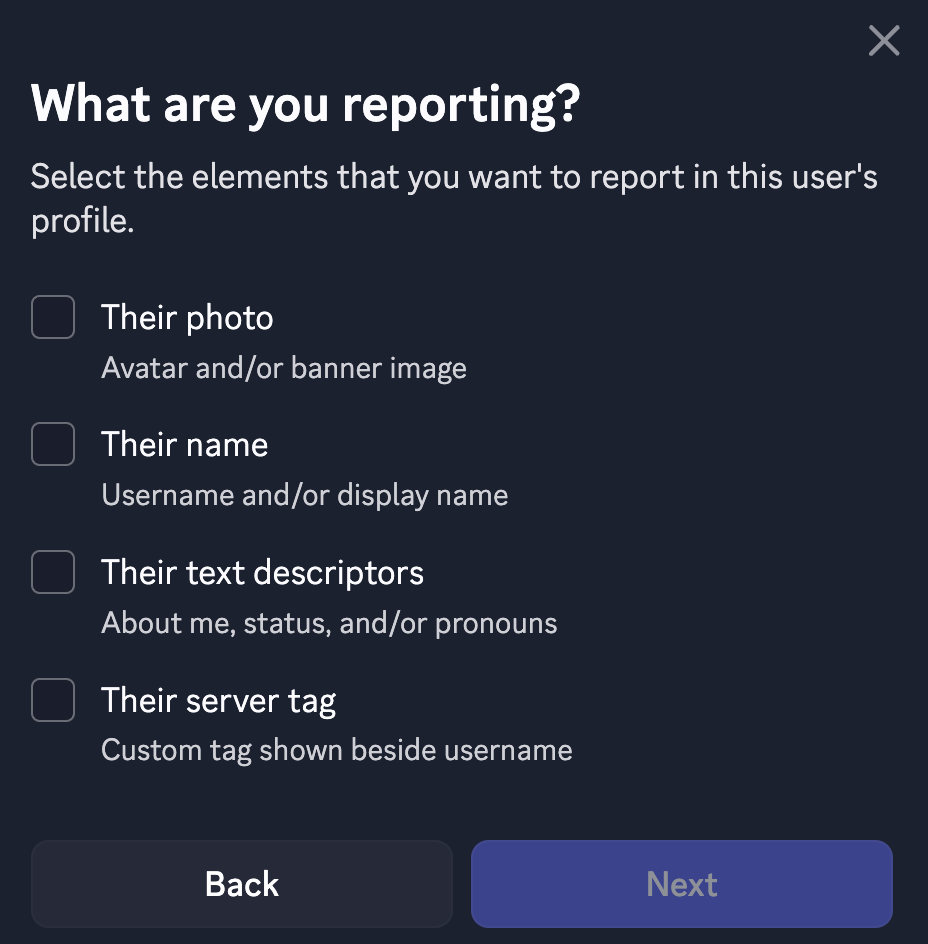

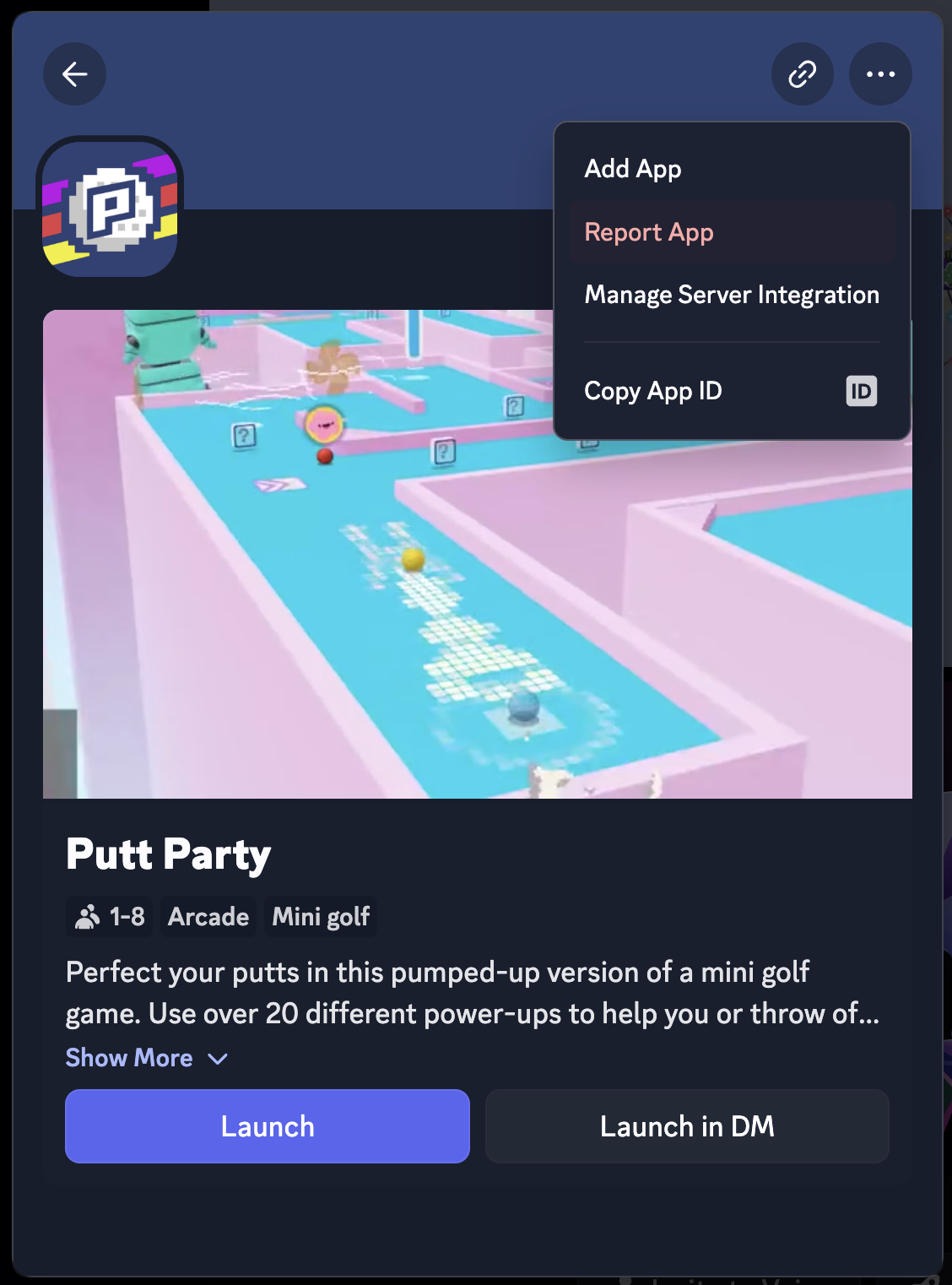

Report an Application via its Profile

- Click into the profile of the Application you wish to report.

- Click on the three dot menu.

- Select “Report App”.

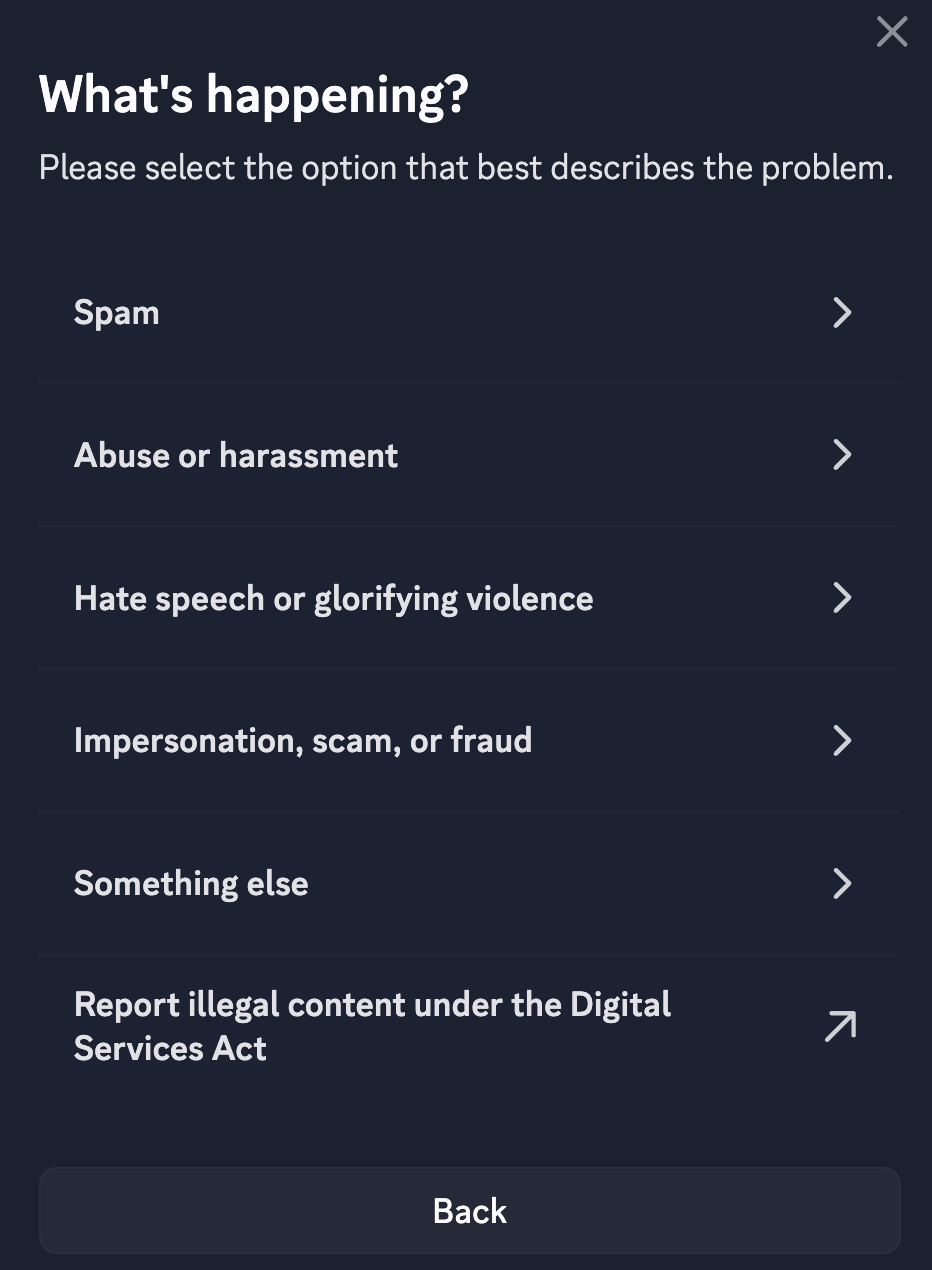

- You can select to report specific elements of the application’s profile by clicking “The app’s profile” or report the app’s behavior by clicking “The app’s behavior”.

- If reporting an Application’s profile, select the element of the profile you are reporting; you can report multiple aspects of a profile at once. Finally, make sure to select the option that best describes the policy violation.

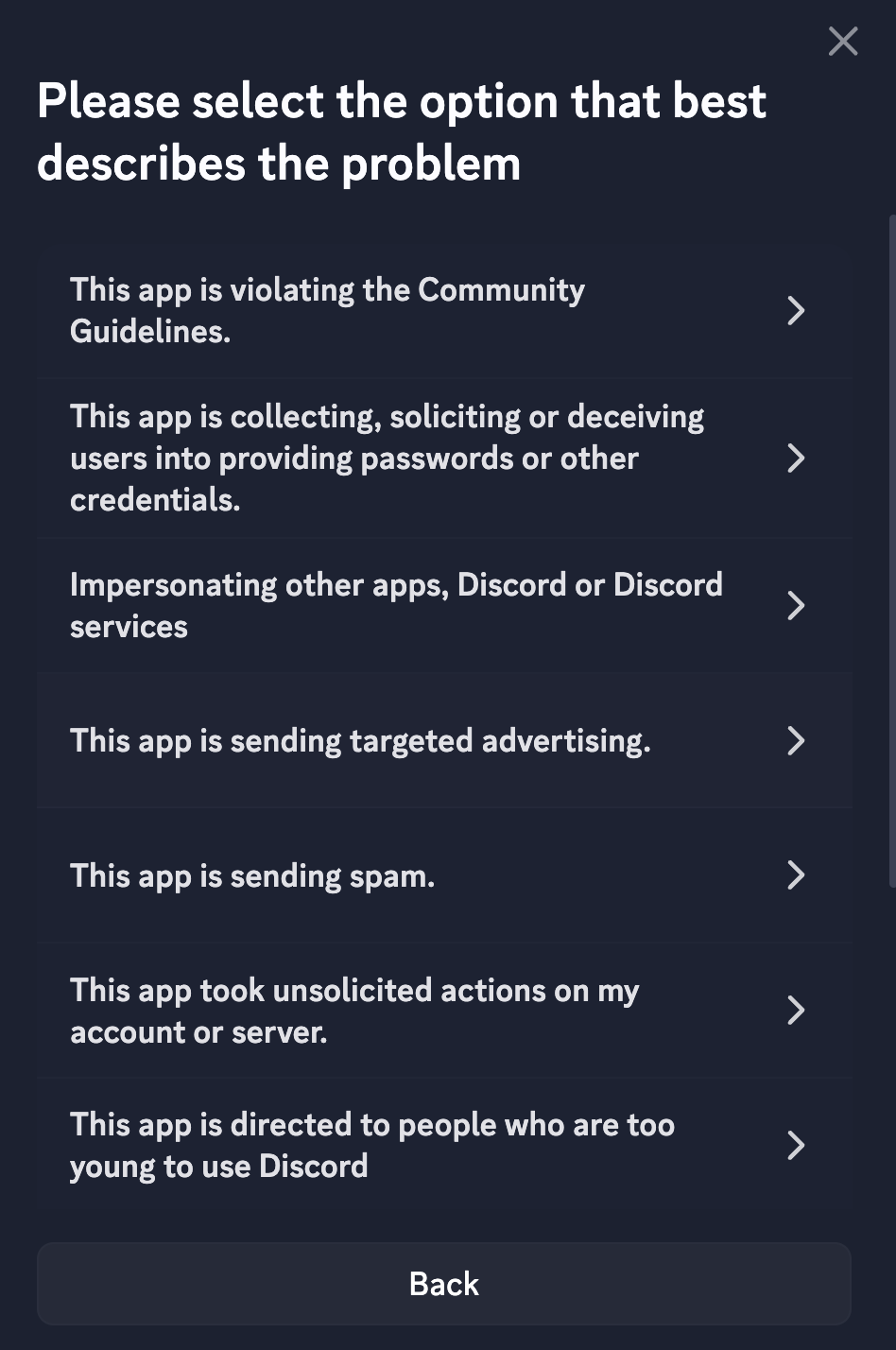

- If you are reporting an Application’s behavior, select the option that best describes the policy violation.

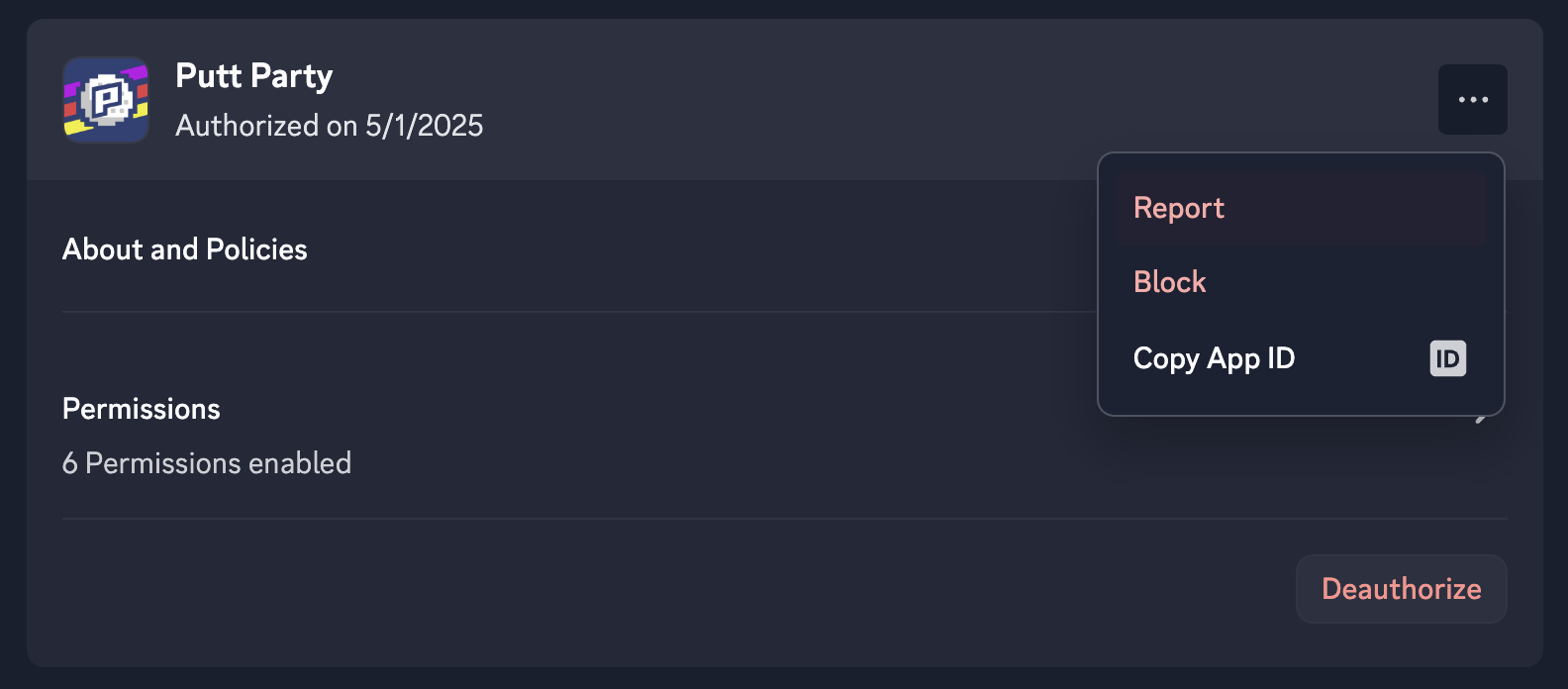

Report an Application via Settings

- Click the gear icon to visit your user settings.

- Click Authorized Apps.

- Find the Application you wish to report and click its three dot menu.

- Select “Report”.

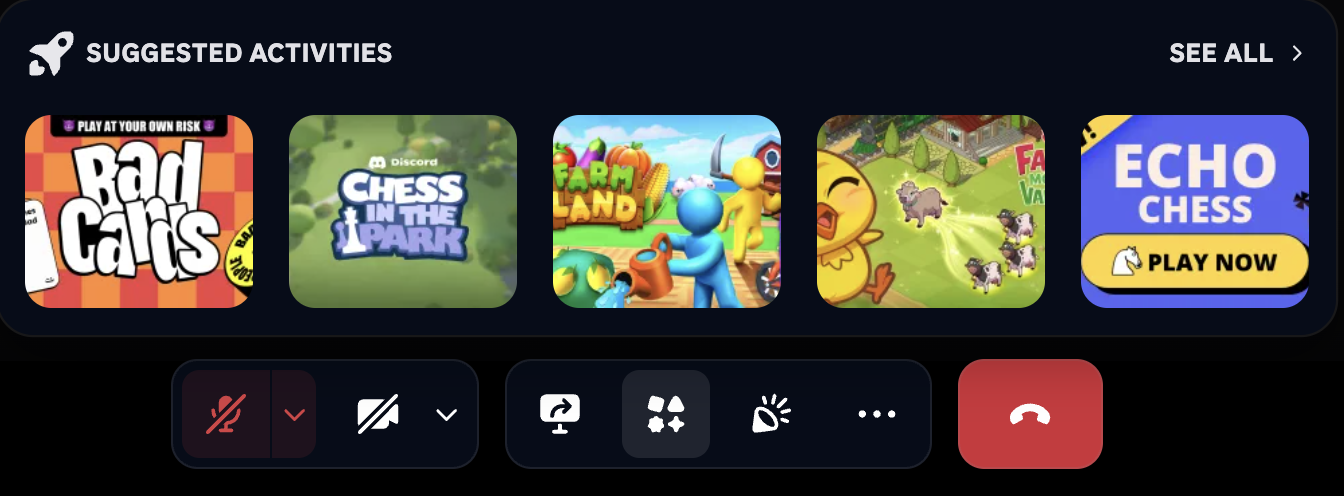

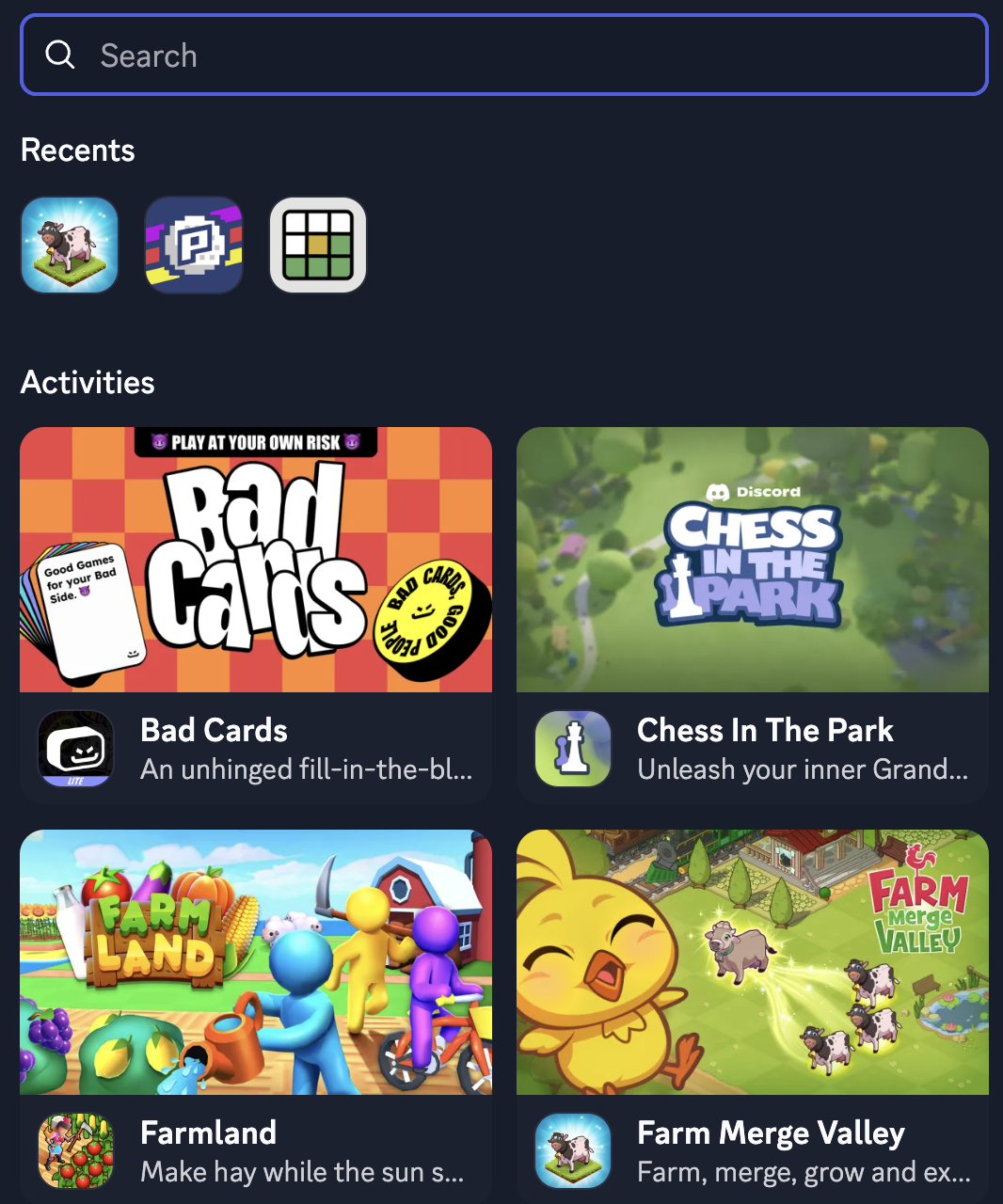

Report an Application via the App Launcher

- While you are in a Voice Channel call, select the App Launcher icon.

- Search for the Activity you wish to report within the App Launcher.

- Click the Activity and click the three dot menu.

- Select "Report App".

- Select the option that best describes the policy violation.

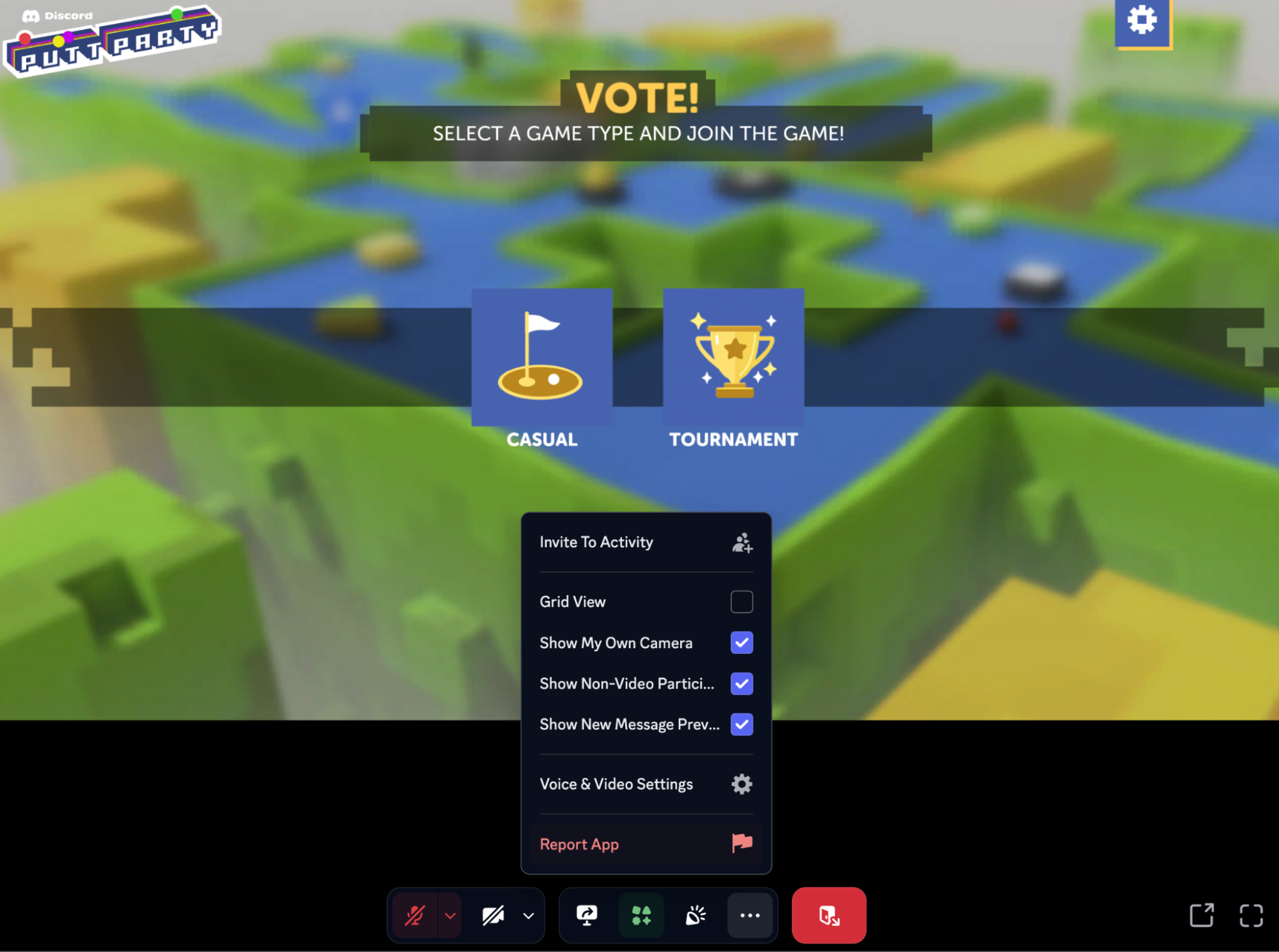

Report an Application Within the Activity UI

- During an Activity session, click on the three dot menu for “More Options”.

- Select "Report App".

- Select the option that best describes the policy violation.

Report Content that Makes An App Ineligible to be Listed Within App Directory

- Select the Discover tile on Desktop or Web.

- Select “Apps”.

- Search for and select the Application you wish to report.

- Once you are on the Application’s page, click the three dot menu.

- Select “Report App”.

- Select “The app’s behavior”.

- Select “Next”.

- Select the “This app contains content that makes it ineligible to be listed or discoverable in the App Directory” reporting option.

- You will be directed to sign into your Discord support account in order to submit a report through our support portal.

- You can also report the app for other behaviors that go against our Developer Policy.

Reports Under the EU Digital Services Act

- EU users can report illegal content under the EU Digital Services Act by clicking here or on the Application profile by clicking the three dot menu, selecting “Report App”, selecting “The app’s behavior”, and clicking on “Report Illegal App Activity under DSA.”

- EU government entities reporting illegal content should follow the process outlined here.

- EU users will be required to go through a verification process and follow the prompts to provide details and descriptions for their report.

- If you wish to report a message from an Application under the EU Digital Services Act, a message URL is required. Here’s how to find the message URL for the desktop and mobile apps.

- Desktop App

  - Navigate to the message that you would like to report.

  - Right-click on the message, or hover over it and click the ellipsis icon.

  - Select Copy Message Link.

  - The message URL will be copied to your device’s clipboard.

- Mobile App

  - Navigate to the message that you would like to report.

  - Press and hold the message to reveal a pull-up menu.

  - Select Copy Message Link.

  - The message URL will be copied to your device’s clipboard.

What Happens After I Submit a Report?

We use a combination of automated and human-powered review methods to process reports. Report review times may fluctuate due to process changes, volume surges, technical issues, and backlogs.

While not every report identifies a violation of Discord’s policies, your reports help us improve and enhance Discord's safety practices.

When you submit a report, your identity is not shared with the account you reported. This is true even if the report is appealed. We never intentionally disclose the identity of a reporter unless we are legally required to do so. For example, this may be the case if Discord receives valid legal process requiring us to provide the identity of the reporter, or a request to remove content under the Digital Millennium Copyright Act (“DMCA”). The DMCA allows the reported user to request further details about the complaint, including the name and email address of the claimant, a description of the allegedly infringed work, and the reported content. In such cases, Discord provides this information in accordance with copyright law requirements.

Social Engineering

Social engineering is a manipulation tactic used by bad actors to trick individuals into divulging sensitive or personal information. The bad actor often poses as a trustworthy entity, offering a seemingly beneficial exchange of information. In its most basic form on our platform, social engineering is manipulating people to give their login credentials to an attacker.

Discord Staff Impersonation

Sometimes attackers try to impersonate Discord staff to gather information. To use this tactic, they hack into a Discord account, then convince the people on that account’s friends list that they've “accidentally reported them.” They then urge those friends to reach out to "Discord Employees" to resolve the issue.

These impersonators often copy social media profiles onto Discord accounts, produce fake resumes, and may even claim their staff badges are hidden for safety reasons. The end goal is to trick you into surrendering your account information or paying for their fraudulent services to “undo the report,” and ultimately to gain access to your financial assets.

Discord Staff will never directly message users on the app for support or account-related inquiries. If someone claiming to be staff asks for personal information, payment, or changes to your login credentials, we recommend that you do not engage further. All Discord users can report policy violations in the app by following the instructions here.

Discord Staff are one of many groups that may be impersonated. The same tactic is used against other companies, so be wary of accounts claiming to represent the support or safety teams of other companies too. In general, if you need support from any company, it is wise to go to the official source instead.

You can always verify your account standing directly from Discord by going into User Settings > Privacy & Safety > Standing. Learn more about account standing here.

Impersonated Discord DMs

Attackers may also resort to impersonating official Discord responses through user accounts or bots. Typically, these messages include threats to your account standing if you do not comply with their demands. An official Discord DM will never ask for your password or account token, and will always display a staff badge on the profile, as well as a system badge that says “Official.”

Malware Tricks

Malware often finds its way onto a device through downloads of malicious files. These files may appear harmless or even enticing—like a game from a friend. But once downloaded and run, they can give bad actors access to your login credentials, email addresses, and even your entire device.

Malicious Links and Fake Nitro Giveaways

Always exercise caution when clicking on links that will take you off of Discord, even when they appear to come from friends or promise rewards like free Nitro.

When you click on a link given to you, a pop-up will show that you are leaving Discord and display the website you are being redirected to. Check the link to make sure it goes where you intended.

Reporting Scams

Reporting safety violations is critically important to keeping you and the broader Discord community safe. All Discord users can report policy violations in the app by following the instructions here. Stay vigilant and informed to protect yourself and your digital assets.

Scams go against Discord’s Community Guidelines, and when we see this kind of activity, we take action, which can include banning users, shutting down servers and engaging with authorities. We are committed to reducing scams through technical interventions and continuously invest in safety enhancements and partner with third parties to accelerate our work.

For more information you can read our Deceptive Practices Policy Explainer as well as our Identity and Authenticity Policy Explainer.

Young people find community and identity online

Online connections are a fixture of modern life. One in three US adults report they are online “almost constantly,” and a similar share of teens say they use social media just as much, according to the Surgeon General’s advisory.

It’s probably true that everyone spends too much time on their phones — but that doesn’t mean there aren’t important things happening there. For young people in particular, online spaces can be great for hanging out with friends, building social circles, and forming identity at a key juncture in life.

Technology also removes geographical limits placed on friendships and allows people to find like-minded peers much more freely.

It’s important to have opportunities to connect with people who are into the same things, whether those are passions, causes, or an identity. Coming together online can help people find community, and help build genuine connections.

On Discord, that may happen in servers focused on a favorite sports team, video game, or TV show. Servers can be small and intimate, with just your core friend group who might use it for hanging out and chatting. They can also be larger spaces for more people to share ideas, or be organized around identities, including neurodivergence, disabilities, or LGBTQ communities, as well as special interests like music, art, or tech.

For some people, spaces like these offer support and social connection that might be hard to come by where they live.

“Especially as an LGBTQ young person, being able to find a space, be it online or in person, can literally be life-saving if they come from an unsupportive community,” said Patricia Noel, Discord’s mental health policy manager and a licensed social worker. “Just being able to find people who accept you, who use your pronouns — all I can say is that it can be a life-saving experience for young people to be able to come on Discord and to find community.”

More people are talking about mental health

Many young people use Discord servers to support each other as they navigate a difficult stage in life. In servers focused on peer support with mental health, members gather to discuss what they’re struggling with and build community around helping one another.

These spaces created and managed by users aren’t replacements for mental health professionals, but they offer an accessible and reliable space to be heard, Noel said. Young people look to communities of friends for validation and support, and they’re at an age in life when that has a significant impact on their sense of self.

“Young people are really invested in their mental health. They want to be having these conversations,” Noel said. “They're so open about what they're experiencing and feeling, and even the way that they support each other is amazing. They're asking the right questions, they’re so in tune with one another, and they’re such an empathetic group.”

In spaces dedicated to discussing mental health, people may feel more free to share things they aren’t yet comfortable sharing elsewhere. They can show up in a space that’s designed to offer words of validation and affirmation, and offer that same kind of support back to others.

“There’s a lot of research coming out around the benefits of peer support and just being able to talk to somebody who's gone through what you’re going through, or who's just within your same age bracket,” Noel said. “It ends up being beneficial for the person seeking support as well as the person who is the supporter.”

Building positive spaces for online communities

The experience of hanging out with friends is central to Discord.

Servers are all about conversation — text, voice, and video — because that’s how people build genuine connections, in the real world and online. Conversations happen at both the server level and also one-on-one or with smaller groups in Direct Messages, or DMs. We think of this in contrast to social media apps that are built for broadcasting and likes, and driven by algorithms that monopolize users’ attention. When all your time is spent on shallow interactions and endless feeds of other people seemingly living their best lives, it’s hard to feel better or more connected.

Often, the opposite is true: research published in the Journal of Medical Internet Research found that social comparison on digital platforms, where people judge themselves against others they see online, is “a significant risk factor for depression and anxiety.” Experiences of quality interactions and social support online, on the other hand, are related to lower levels of depression and anxiety.

The good news is we know how online communities can bring people together. The internet can still provide new solutions to people’s need for social connection, and Discord is committed to fostering safe, healthy, and supportive spaces on our platform.

“The internet isn’t going anywhere. It's a tool that young people use, and it's going to continue to be a part of their lives,” Noel said. “They want to be part of the solutions. They want to be included when it comes to deciding what happens next.”

1. Keep it simple.

When designing safety alerts, Discord uses plain language, avoids idioms and metaphors, and keeps sentences and words short when possible. This makes alerts easier to understand in the moment, without overloading users with information. This can be particularly useful for users who may be in a heightened emotional state, for example, if they just got a notification that they violated Discord’s anti-harassment policy or they want to report someone else for harassing them.

Our design team tries to put themselves in teens’ shoes in those moments and to imagine what their emotional state might be at the time. The simpler we can make options for them, the better. We try to make it easier for them to move through the flow so they are clear about what's supposed to happen.

Simple language is timeless. It isn’t trendy or metaphorical. And it translates better across different languages and cultures.

Take, for example, a teen who receives a DM from a user for the first time: Discord may provide some helpful education about online safety.

2. Guide, don’t reprimand.

For features like Discord’s Teen Safety Alerts, Discord partnered with Thorn, a leading child safety nonprofit, to design features with teens’ online behavior in mind. The goal is to empower teens to build their own online safety muscle, not make them feel like they've done something wrong. Discord builds teachable moments and guidance into our products, using language that aims to restore the user’s sense of control without shaming them.

“With teens, because they can't opt out of some of these alerts, we want it to feel more like a guide—a guardrail versus a speed bump,” said Passmore, the lead product designer for the Safety team. “A speed bump is saying, ‘Hey, you're doing something wrong, you need to check yourself.’ But we built Teen Safety Alerts to feel more like guidance.”

This alert will encourage them to double check if they want to reply, and will provide links to block the user or view more tips for staying safe. The goal is to empower teens by providing information that helps them make important decisions to protect themselves.

3. Show that you’re listening and can help them regain control.

When a teen encounters potentially harmful or sensitive content or has a run-in with another user who is acting hostile, they, too, may be in an activated emotional state. They may be angry at the other person, or even at themselves. This is common with cyber-bullying, which researchers say can lead to depression and feelings of low self-esteem and isolation.

Our approach is to meet them in that mindset. This means reminding them that they have options, like blocking a person or reporting a message. We create a space to acknowledge what they’re experiencing.

It’s also important not to dwell on the upsetting incident. Instead, Discord uses language that aims to move the teen out of that emotional state and into a place of self-awareness. This can help them to calm down and feel more in control.

A user’s mindset matters to Discord. That is why we try to choose our words so carefully, to support users in the best possible way.

Defining the terms

One of the main challenges for the Platform Policy team is writing the rules for content that isn’t so clearly defined. Riggio notes that for the most severe violations, such as posting illegal material or making an imminent threat of physical harm, the line between what’s allowed and what’s prohibited is bright red. Discord has a strict policy for those types of violations and clear processes in place for removing content and banning the user.

But for content where there aren’t legal definitions or even clear societal ones, like for bullying and harassment, the line is more gray.

Landing on a definition starts with posing a fundamental question, said Riggio: “What is the problem that we’re trying to solve here on Discord?” How the team goes about answering that challenge becomes the guiding force behind each policy.

For bullying and harassment, the team looks at the user experience. We don’t want people to experience psychological or emotional harm from other users.

“The spirit of the policy is focused on individual and community experience and building healthy interactions,” Riggio said. Ultimately, Discord is a place where everyone can find belonging, and experiencing these harms hinders people from doing that.

The team did rigorous research, including reviewing other platforms and guidance from industry groups such as the Global Internet Forum to Counter Terrorism and the Tech Coalition, which was formed so companies could share the best intelligence and resources when it comes to child safety online. They also read the academic literature on what bullying and harassment look like in society.

They also brought in subject matter experts from different countries, such as academics who study harassment and legal scholars who can offer additional context for how platforms should be thinking about policies and enforcement. These experts provided feedback on early drafts of the policies.

They defined bullying as unwanted behavior purposefully conducted by an individual or group of people to cause distress or intimidate particular individuals, and harassment as abusive behavior towards individuals or groups of people that contributes to psychological or reputational harm that continues over time.

Policies like this one are only useful if they are enforceable. So the team identified two dimensions that must be taken into account when assessing content.

First, the intent of the instigator. These are signals that the user or the server is posting content deliberately or is intending to cause harm. Intent isn’t always easy to spot, but Riggio said just as in real life, there are signs. For example, a user is directly harassed by someone, or there have been multiple instances of harassment in the past.

“What happens in society happens online. What happens in your high school can sometimes happen on Discord,” she said.

Second, is the content targeted? Is it targeting a specific individual by name, or by the group they belong to? Here, there could also be dimensions of targeting users or groups because of certain protected characteristics, such as race or gender, which would then be considered a violation of Discord’s Hate Speech policy.

How this policy covers trolling

The term trolling has many dimensions. It’s been used to normalize “edgy behavior.” “Trolling in and of itself is a complicated term, because some communities that intend to cause harm downplay their behavior with, ‘We're just trolling,’” Riggio said.

Trolling on Discord takes many forms. From "server raids," when a group of users joins a server at the same time to cause chaos, to "grief trolling," when someone makes jokes about or degrades the deceased or their next of kin, this type of negative behavior is rooted in disruption and harassment, and it is not tolerated on our platform, no matter how harmless the instigators may think it is.

“I hate the saying ‘sticks and stones may break my bones, but words will never hurt me,’ because words hurt,” said Patricia Noel, Discord’s mental health policy manager who is a licensed social worker and has a background in youth mental health. She collaborates cross-functionally within Discord and with external partners, such as the Crisis Text Line, with the goal of providing tools and resources to teens who may be experiencing mental health challenges.

Why education is as important as enforcement

Part of the enforcement strategy for bullying and harassment is to include a series of warnings, with escalating enforcement actions aimed at educating users, namely teens, that certain behaviors are not OK on Discord.

“This generation is really empathetic. But I also know that there are people who go online just for the sake of going online and being a troll,” Noel said. “Those might be the very same folks who are having problems at home, who are dealing with their own mental health and well being issues. They might not immediately recognize that they are doing harm to someone else.”

Understanding that young people are going to make mistakes is reflected in how the new warning system handles suspensions. For lower harm violations falling under the Bullying and Harassment policy, Discord will test suspensions rather than permanent bans. The idea here is that some teens may need a long pause before coming back to the platform, given how much growth and development occurs during each year of adolescence.

And while there will always be a zero-tolerance policy for the most serious violations, Discord will focus on remediation, when appropriate, for topics like bullying and harassment. This is so teens can have an opportunity to pause, acknowledge that they did something wrong, and hopefully change their behavior.

The challenge is that the behavior is so wide-ranging, said Ben Shanken, Discord’s Vice President of Product. He pointed to a recent analysis by Discord that looked at reports of bullying in large, discoverable servers.

“If you put these examples on an SAT test and asked, ‘Is this bullying?’ many people probably would answer the questions incorrectly,” he said, noting how Discord’s large teen user base is inclined to “troll-y” behavior.

In one example, Shanken shared how teens were trolling each other by deceptively reporting their friends to Discord for supposedly being underage when in fact they were not.

It’s trolling. While it’s not nice, it’s also part of how teens joke around. But, instead of simply banning the offender, we build in an opportunity where they can learn from their mistake.

“If we ban a person, and they don't know what they did wrong, they likely won't change their behavior,” said Shanken. “Giving them a warning and saying, here's what you did wrong, flips the script in a more constructive way.”

At its core, Discord is a place where people can come together to build genuine friendships. Educating users, especially young people, through our warning system is one part of Discord’s holistic strategy to cultivate safe spaces where users can make meaningful connections.

“The nature of being a teenager and navigating relationships can be tricky. There are going to be situations where you hurt people's feelings. There may be moments where users lose their cool and say something really mean or even vaguely threatening,” Riggio said. “We want to build in more of a runway for them to make mistakes online but also learn from them.”

Discord’s remediation philosophy

Our approach to remediation is inspired by restorative justice, a concept from criminal justice that prioritizes repairing harm over punishment. Discord’s Community Guidelines exist to keep people and communities safe, and it’s important to make sure everyone knows how and why to follow them. And still, when people break the rules, most of the time they deserve a chance to correct themselves and show that they’ve learned.

When users break an online platform’s rules, they don’t always do so intentionally. Consider how many different platforms someone might use in a day, each with their own rules and expectations. Discord needs to enforce its own Community Guidelines, while understanding that a user who violates the Guidelines might not even know they had broken a rule in the first place.

“A lot of users have their own ‘terms of service’ that may be different from ours,” said Jenna Passmore, Discord’s senior staff product designer for safety. Admins and moderators set rules, norms, and expectations for their own servers on top of Discord’s Community Guidelines and Terms of Service, and users may not know when they’re breaching the rules of a server or the platform. “If there's a mismatch there, and it’s not a severe violation, we don't want to kick them off the platform.”

Instead, Discord wants to teach people how to stay in bounds and keep themselves, their friends, and their communities safe and fun for everyone. And, to be clear, when it comes to the most severe violations—such as those involving violent extremism and anything that endangers or exploits children—Discord will continue to have a zero-tolerance policy.

Putting users in charge of the outcomes

In most cases, breaking Discord’s Community Guidelines triggers a series of events that are aimed at returning the user to their community with a better understanding of how they should behave.

Education comes first. When Discord spots a violation, it lets a user know what specific thing they did wrong. They’re prompted to learn more, in plain language, about that rule and why it matters. Nobody expects someone to memorize the full Community Guidelines or Terms of Service, but this is the best moment to help them learn what they need to know.

Consequences come next. Any penalties we impose are transparent and match the severity of the violation. A first-time, low-level violation, for example, could prompt a warning, removal of the offending content, or a cooling-off period with temporary restrictions on account activity.

When the action Discord takes to enforce its Community Guidelines takes proper measure of the violation and provides users a path back to good standing, users can often return to their community with a greater understanding of the rules and the need to change their own behavior. If users stay on good terms, similar violations in the future will play out roughly the same way. If they continue to break the rules frequently, the violations stack up, and so do the consequences. The idea is to provide users an opportunity to learn from their mistakes, change their behavior, and therefore stay in communities they know and trust on Discord, rather than pushing users who violate Discord’s Community Guidelines off the platform and to other spaces that tolerate or encourage bad behavior.

“We want users to feel like they have some agency over what's going to happen next,” Passmore said. “We want an avenue for folks to see our line of thinking: You violated this policy, and this is what we're doing to your account. Learn more about this policy, and please don't do this again. If you do it again, here's what's going to happen to your account.”

Banning is reserved for the most extreme cases, and the highest-harm violations result in immediate bans. Otherwise, if someone repeatedly demonstrates that they won’t play by the rules, the consequences become more severe, from losing access to their account for a month, to a year, and eventually—if they continue to violate the Community Guidelines—for good.

Everyone knows where they stand

Remediation only works if users who learn and change their behavior can return to good standing. Discord’s account standing meter lets users see how their past activity affects what they’re able to do on the platform.

The standing meter has five levels: good, limited, very limited, at risk, and banned.

“For most folks, it will always be ‘good’,” Passmore said. When it’s not, the hope is that someone will act more thoughtfully so they can return to that good status and stay there. She compared it to road signs that show the risk level for wildfires. “It changes your behavior when you’re driving through a national park and you see that there’s a moderate fire risk,” she said.

Discord’s internal policies outline the severity of each type of violation, the appropriate consequence, and how one type of violation compares to others. These policies help ensure that the employees who review violations of Discord’s Community Guidelines enforce the rules fairly and consistently. That means when someone breaks the rules, employees aren’t deciding punishment on an ad hoc basis. Instead, the outcome is determined by the severity and number of violations.

“Our goal is to help people act better,” said Ben Shanken, Discord’s vice president of product, who oversees teams that work on growth, safety, and the user experience. “People don't always know that they're doing bad things, and giving them a warning can help them to improve.”

Ultimately, we believe that guiding users toward better behavior—and giving them the tools they need to learn—results in a better experience for everyone.

First, we do our research.

Discord’s policy team invests time and resources into understanding the dynamics of the type of behavior they’re writing policies about. “The team does a significant amount of research to understand: What is the phenomenon? What does this issue look like on other platforms? What does the issue look like in society?” said Bri Riggio, the senior platform policy manager who oversees this process.

The policy team reviews scholarly research, data, and nonprofit and industry guidance, among other resources. They bring in external subject matter experts from around the globe, like academics who study online behavior or legal scholars who focus on sexual harassment.

They also do internal research. For example, they interviewed Discord’s Trust & Safety team, the people who review content for violations, to collect examples of what they consider bullying and harassment.

One thing the policy team keeps in mind during this stage is how this research pertains to teens. “Teens are at the forefront of my research—thinking about how we protect young people who are online and making sure they feel safe and comfortable using Discord,” said Edith Gonzalez, a platform policy specialist. She added that she looks for research that addresses parents' concerns when it comes to issues such as cyberbullying.

We identify the problem we’re trying to solve for.

At the heart of each policy is the question Riggio and her team start with: What is the problem that we are trying to solve on Discord? She calls this idea the “spirit” of the policy.

“If we are trying to stop users from feeling psychological or emotional harm from other users, if we want them to have a good experience, then we write the policy to try and drive towards that north star,” she said. “It can be a guiding force for us, especially when we get escalations or edge cases where the policy may not be so clear cut.”

For example, if the Trust & Safety team gets a report of content that was flagged for bullying, but the content falls in a gray area or it’s not clear whether it was intentional, the team discusses whether taking action under the policy would actually solve the problem. Put another way: Does the content go against the spirit of the policy? These discussions inform how the policy team can sharpen and better articulate policies over time.

We define the terms and the parameters for enforcement.

Policy specialists like Gonzalez begin listing all the different behaviors that describe the issue. For bullying and harassment, examples might include: sexual harassment, server raids, sending shock content, and mocking a person's death. “Once I write down all the criteria, I expand each behavior,” said Gonzalez. “How do you define a violent tragedy? How do you define mocking? How do you define unwanted sexual harassment? Slowly, the policy starts off as a list, and then I keep expanding it.”

For this policy, we define bullying as unwanted behavior purposefully conducted by an individual or group of people to cause distress or intimidate, and harassment as abusive behavior towards an individual or group of people that contributes to psychological or reputational harm that continues over time.

Riggio points out the significance of the behavior being sustained over time. It’s an acknowledgment that context matters: without that element, friendly banter and joking between friends could be misconstrued as harassment. “We would be potentially over-enforcing the policy with users who are just having fun with each other.”

We index on educating users who we know are going to make mistakes.

Research and experience tell us that people don’t always know the rules, and when appropriate, they need an opportunity to learn from their mistakes. Discord built a warning system that helps educate users, especially teens. (Important note: The warning system does not apply to illegal or more harmful violations, such as violent extremism or endangering children. Discord has a zero-tolerance policy against those activities.)

“There's a perception that teens will roll their eyes at a rule, or they don't like rules, but the reality is, teens really like it when there are clear rules that resonate with them,” said Liz Hegarty, Discord’s global teen safety policy manager.

Once they learn the rule, they might slip up again, so the policy team built in several opportunities to educate teens that bullying isn’t allowed. These show up in the form of a series of time-outs, versus a flat-out ban after a few strikes, as is common on other platforms.

“It’s teen-centered,” said Hegarty. “It's this idea of: how can you educate as opposed to just punishing teenagers when they're making mistakes?”

This, too, reflects the spirit of the Bullying and Harassment policy, because ultimately, Discord wants to empower people to build meaningful relationships on the platform.

“The spirit is intended to get at the various behaviors that we know make users feel uncomfortable,” said Riggio. “That’s the behavior that impacts feelings of safety and the culture of a community.”

Moderation across Discord

All users have the ability to report behavior to Discord. Our Safety team reviews user reports for violative behavior so we can take enforcement action where appropriate.

Discord also works hard to proactively identify harmful content and conduct so we can remove it and therefore expose fewer users to it. We work to prioritize the highest harm issues such as child safety or violent extremism.

We use a variety of techniques and technology to identify this behavior, including:

- Image hashing and machine-learning powered technologies that help us identify known and unknown child sexual abuse material. We report child sexual abuse and other related content and perpetrators to the National Center for Missing & Exploited Children (NCMEC), which works with law enforcement to take appropriate action.

- Machine learning models that use metadata and network patterns to identify bad actors or spaces with harmful content and activity.

- Human-led investigations based on flags from our detection tools or reports.

- Automated moderation (AutoMod), a server-level feature that empowers community moderation through features like keyword and spam filters that can automatically trigger moderation actions.

In larger communities, we may use automated means to proactively identify harmful content on the platform and enforce our Terms of Service and Community Guidelines.

If we identify a violation, we determine the appropriate intervention based on the content or conduct and take action, such as removing the content or suspending a user account. The results of our actions are conveyed to users through our Warning System.

Creating a safe teen experience

In addition to all the measures we've outlined above, we add layers of protection for teen users.

Our proactive approach for teens includes extra safekeeping because we believe in protecting the most vulnerable populations on Discord. That means we may default teen users into certain features, such as the Sensitive Media Filter, or into specific settings. In some cases, we may prevent teen users from selecting certain settings at all.

For teens, we may also monitor the content or activity associated with teen accounts to identify possibly unwanted messages through Teen Safety Assist. For example, if we detect potentially unwanted DM activity directed at a teen account, we may send a safety alert in chat with information about how the teen might handle the situation. (For messages between adults, we do not proactively scan or read the content by default.)

Together we are building a safer place for everyone to hang out. To stay up to date on our latest policies, check out our Policy Hub.

What do we mean by safety?

When we talk about protecting users, we’re talking about protecting them from anything that could cause harm. That includes things like harassment, hateful conduct, unwanted interactions from others, inappropriate contact, violent and abusive imagery, violent extremism, misinformation, spam, fraud, scams, and other illegal behavior.

It’s a broad set of experiences, but each of them can affect someone’s life negatively in real ways. They also degrade the overall experience of the community where they happen.

In addition to keeping users safe, we also need them to feel safe. That’s why we talk openly about our work, publish safety metrics each quarter, and give everyone access to resources in our Safety Center.

A culture of safety starts with the people building the technology

Discord’s commitment to safety is reflected in its staff and the way we build our products.

Over 15% of our staff works directly on the team that ensures our users have a safe experience. We invest time and resources here because keeping users safe is a core responsibility: It’s central to our mission to create the best place to hang out online and talk to friends.

Our safety work includes experts from all parts of the company, with a wide range of backgrounds. These experts are engineers, product managers, designers, legal experts, policy experts, and more. Some have worked in technology, while others come from social work, teen media, human rights, and international law. Building a diverse team like this helps ensure we get a 360-degree view of threats and risks, and of the best ways to protect against them.

We also invest in proactively detecting and removing harmful content before it is viewed or experienced by others. During the fourth quarter of 2023, 94% of all the servers that were removed were removed proactively. We’ve built specialized teams, formed external partnerships with industry experts, and integrated advanced technology and machine learning to keep us at the cutting edge of providing a safe experience for users.

Getting users engaged in safety

While rules and technical capabilities lay the foundation, users play a central part in making servers safe for themselves and others.

Our Community Guidelines clearly communicate what activities aren’t allowed on Discord. We warn, restrict, or even ban users and servers if they violate those rules. But we don’t want to spend our days chastising and punishing people, and we don’t want people to worry that they’re always at risk of being reported. That’s no way to have fun with friends.

Instead, we help users understand when they’ve done something wrong and nudge them to change their behavior. When people internalize the rules that way, they recognize when they, or someone else, might be breaking them. They discuss the rules organically, and in the process build a shared sense of what keeps their community safe and in good standing.

This has a multiplier effect on our work to build safer spaces: Users act with greater intention and they more proactively moderate themselves and their communities against unsafe actions. When communities have this shared commitment to the rules, they’re also more likely to report harmful activity that could put an otherwise healthy community at risk.

Changing the safety narrative

It’s sometimes said in technology that a platform can’t really reduce harm or block bad actors from doing bad things. Instead, those actions have to be addressed after they happen, when they’re reported or detected.

At Discord, we have loftier goals than that.

We actually want to reduce harm. We want to make it so hard for bad actors to use our platform that they don’t even try. For everyone else, we want to build products and provide guidance that make safety not just a technical accomplishment, but a cultural value throughout our company and the communities we support.

What is a teens’ rights approach?

A teens’ rights approach honors young people’s experience, including areas where they could use a little help—say, when they push boundaries just a little too far or succumb to peer pressure and engage in inappropriate behavior online.

The idea comes from the United Nations Committee on the Rights of the Child’s guidance on the impact of digital technologies on young people’s lives. For the report, committee members consulted with governments, child experts, and other human rights organizations to formulate a set of principles designed to “protect and fulfill children’s rights” in online spaces.

The committee also engaged those at the center of the report—young people themselves. The UN’s guidance was not only rooted in research, it treated teens with respect. It listened to them.

It's this kind of holistic and human rights approach that makes this body of work so influential, especially for child rights advocates. But it’s also become a guiding force for Discord.